Research Topic

Research Topic Summarization

-

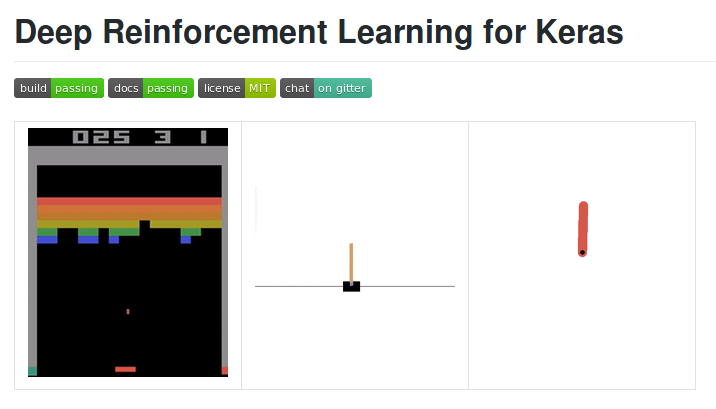

Keras RL tutorial, which covers the topics below

-

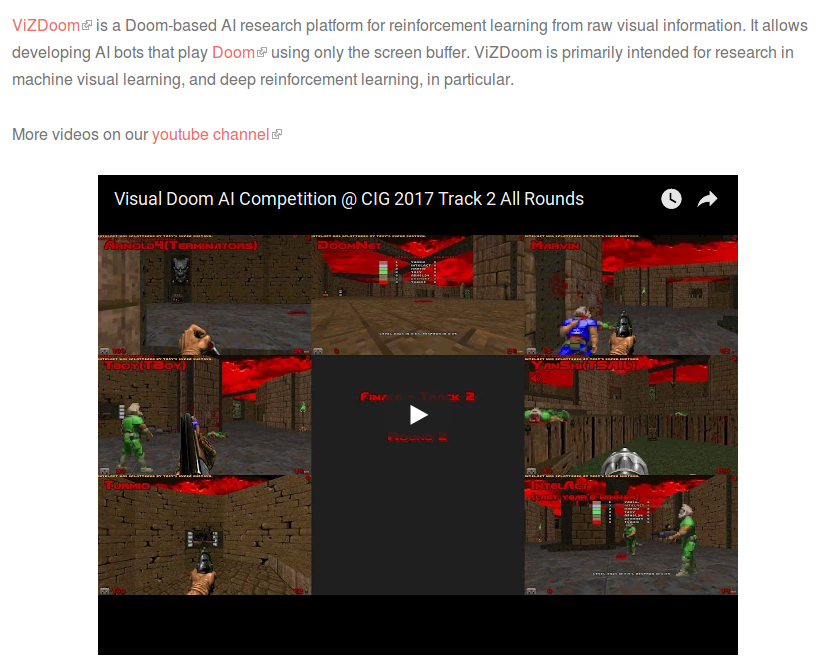

A3C Doom simulation

This IPython notebook includes an implementation of the A3C algorithm. In it, we use A3C to solve a simple 3D Doom challenge using the VizDoom engine. For more information on A3C, see the accompanying Medium post.
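A3C trains an actor (the policy) and a critic (a value function) from short rollouts collected by parallel workers. The sketch below shows one piece of that update: computing discounted n-step returns and advantages for a single rollout segment. It is not taken from the notebook, and the function name `nstep_returns_and_advantages` is hypothetical. In the full algorithm the advantages weight the policy-gradient term, and the returns serve as regression targets for the critic.

```python
from typing import List, Tuple


def nstep_returns_and_advantages(
    rewards: List[float],
    values: List[float],
    bootstrap_value: float,
    gamma: float = 0.99,
) -> Tuple[List[float], List[float]]:
    """Discounted n-step returns R_t and advantages A_t = R_t - V(s_t) for one rollout."""
    returns: List[float] = []
    # Start from the critic's estimate of the state after the last step
    # (pass 0.0 if the episode terminated inside this segment).
    running = bootstrap_value
    for r in reversed(rewards):
        running = r + gamma * running  # R_t = r_t + gamma * R_{t+1}
        returns.append(running)
    returns.reverse()
    advantages = [ret - v for ret, v in zip(returns, values)]
    return returns, advantages


if __name__ == "__main__":
    rets, advs = nstep_returns_and_advantages([0.0, 0.0, 1.0], [0.5, 0.5, 0.5], 0.0, gamma=0.5)
    print(rets)  # [0.25, 0.5, 1.0]
    print(advs)  # [-0.25, 0.0, 0.5]
```

Bootstrapping from the critic's value at the end of the segment lets each worker update after a few steps instead of waiting for the episode to finish.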

-

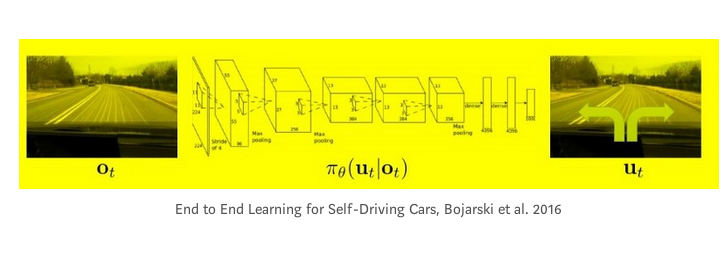

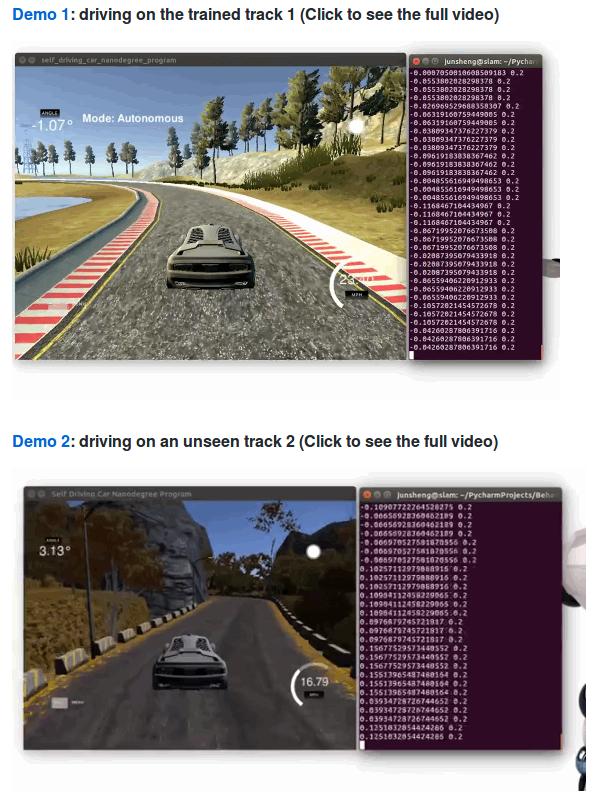

Behavioral Cloning in autonomous driving

A deep neural network coded to steer a car in a game simulator. The network predicts the steering angle directly from the image of the car's front camera. The training data was collected only on track 1, by manually driving two laps, yet the network learns to drive the car on other tracks as well.
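Behavioral cloning treats driving as supervised regression: each recorded frame is an input and the human's steering angle is the label. A minimal sketch of that setup, assuming each frame has already been reduced to a small hypothetical feature vector and swapping the project's deep network for a linear model trained by gradient descent on mean squared error; the demonstration data is synthetic and the helper names are illustrative only.

```python
import random
from typing import List, Tuple

Sample = Tuple[List[float], float]  # (features of one camera frame, recorded steering angle)


def predict(w: List[float], b: float, x: List[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def train_steering_regressor(samples: List[Sample], lr: float = 0.1, epochs: int = 500):
    """Fit steering ~ w . x + b by full-batch gradient descent on mean squared error."""
    n_feat, n = len(samples[0][0]), len(samples)
    w, b = [0.0] * n_feat, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_feat, 0.0
        for x, y in samples:
            err = predict(w, b, x) - y
            for i in range(n_feat):
                grad_w[i] += 2.0 * err * x[i] / n
            grad_b += 2.0 * err / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical "human driver" whose steering is angle = 0.5 * f0 - 0.3 * f1
    demos = []
    for _ in range(50):
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        demos.append((x, 0.5 * x[0] - 0.3 * x[1]))
    w, b = train_steering_regressor(demos)
    print(round(predict(w, b, [0.2, -0.4]), 3))  # close to 0.22
```

A real pipeline would replace the linear model with a CNN over raw pixels, but the training signal (recorded angle as the regression target) is the same.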

-

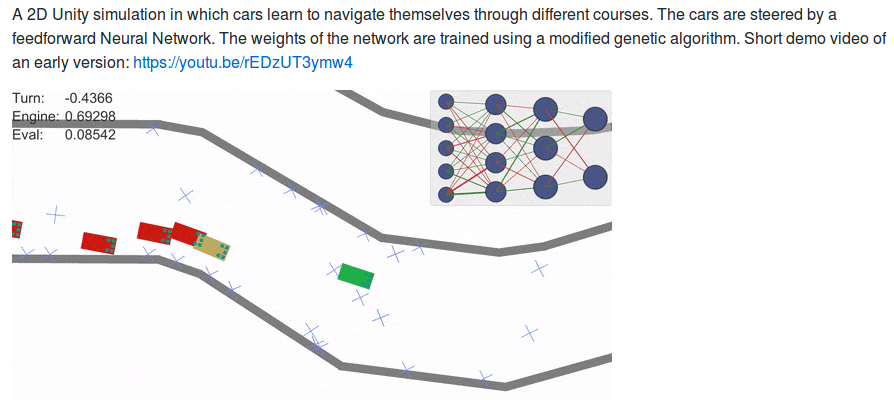

2D Unity car simulation

A 2D Unity simulation in which cars learn to navigate themselves through different courses. The cars are steered by a feedforward neural network. The weights of the network are trained using a modified genetic algorithm.
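The source says only that the weights are trained with a "modified" genetic algorithm. The sketch below uses a plain GA instead (truncation selection, elitism, uniform crossover, Gaussian mutation) to evolve the weights of a tiny feedforward network. The 2-input, 3-hidden-unit network, the fitness function (match a hypothetical target steering of `tanh(2 * (left - right))` from two distance readings), and all parameter values are assumptions for illustration, not the project's setup, where fitness would come from how far a car gets along the course.

```python
import math
import random
from typing import List, Tuple

N_IN, N_HID = 2, 3
N_WEIGHTS = N_IN * N_HID + N_HID + N_HID + 1  # hidden weights + biases, output weights + bias

# Hypothetical training cases: (left_distance, right_distance) -> target steering
CASES = [((l, r), math.tanh(2.0 * (l - r))) for l in (0.0, 0.5, 1.0) for r in (0.0, 0.5, 1.0)]


def forward(weights: List[float], inputs: Tuple[float, float]) -> float:
    idx, hidden = 0, []
    for _ in range(N_HID):
        s = weights[idx + N_IN]  # bias of this hidden unit
        for i in range(N_IN):
            s += weights[idx + i] * inputs[i]
        hidden.append(math.tanh(s))
        idx += N_IN + 1
    out = weights[idx + N_HID]  # output bias
    for j in range(N_HID):
        out += weights[idx + j] * hidden[j]
    return math.tanh(out)


def fitness(weights: List[float]) -> float:
    # Higher is better: negative mean squared steering error
    return -sum((forward(weights, x) - y) ** 2 for x, y in CASES) / len(CASES)


def evolve(generations=60, pop_size=30, elite=6, sigma=0.3, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(-1, 1) for _ in range(N_WEIGHTS)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        history.append(fitness(pop[0]))
        parents = pop[:elite]
        children = [p[:] for p in parents]  # elitism: best genomes survive unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            child = [wa if rng.random() < 0.5 else wb for wa, wb in zip(a, b)]  # uniform crossover
            children.append([w + rng.gauss(0.0, sigma) for w in child])  # Gaussian mutation
        pop = children
    pop.sort(key=fitness, reverse=True)
    history.append(fitness(pop[0]))
    return pop[0], history


if __name__ == "__main__":
    best, history = evolve()
    print(f"best fitness: {history[0]:.4f} -> {history[-1]:.4f}")
```

Because the elite genomes are copied unchanged, the best fitness can never decrease from one generation to the next.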

-

Robotics and Deep Learning

-

Using Python programming to Play Grand Theft Auto 5

Explorations of using Python to play Grand Theft Auto 5, mainly for the purpose of creating self-driving cars and other vehicles.

-

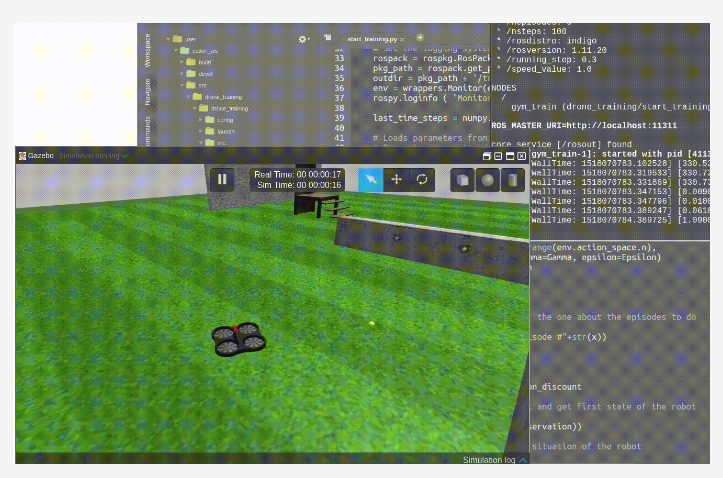

Using OpenAI with ROS

The drone training example: in this example, we train a ROS-based drone to fly to a given location in space while staying as low as possible (perhaps to avoid being detected) and avoiding obstacles in its way.
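The example's objective (reach a location, stay low, avoid obstacles) has to be expressed as a reward signal. The source does not give that reward, so the sketch below is a hypothetical shaping under stated assumptions: reward progress toward the goal, subtract a cost proportional to altitude `z`, end the episode with a large penalty on collision and a bonus on reaching the goal. The `DroneStep` type, the weights, and the goal radius are all illustrative.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class DroneStep:
    position: Vec3       # (x, y, z) after the action
    prev_position: Vec3  # (x, y, z) before the action
    collided: bool


def drone_reward(step: DroneStep, goal: Vec3, altitude_weight: float = 0.5,
                 goal_radius: float = 0.5, collision_penalty: float = 100.0,
                 goal_bonus: float = 100.0) -> Tuple[float, bool]:
    """Return (reward, episode_done) for one step (hypothetical shaping)."""
    if step.collided:
        return -collision_penalty, True
    d_now = math.dist(step.position, goal)
    if d_now < goal_radius:
        return goal_bonus, True
    progress = math.dist(step.prev_position, goal) - d_now  # positive when moving closer
    altitude_cost = altitude_weight * step.position[2]      # flying higher costs more
    return progress - altitude_cost, False


if __name__ == "__main__":
    goal = (100.0, 0.0, 0.0)
    low, _ = drone_reward(DroneStep((1, 0, 1), (0, 0, 1), False), goal)
    high, _ = drone_reward(DroneStep((1, 0, 3), (0, 0, 3), False), goal)
    print(low > high)  # True: the same move scores better closer to the ground
```

Tuning `altitude_weight` against the progress term decides how strongly the agent trades a longer path for a lower one.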

-

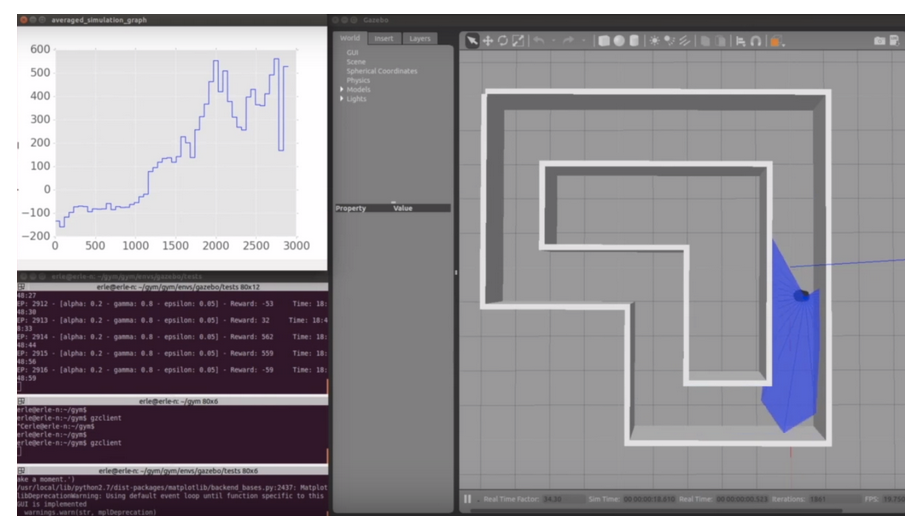

Reinforcement Learning with ROS and Gazebo

-

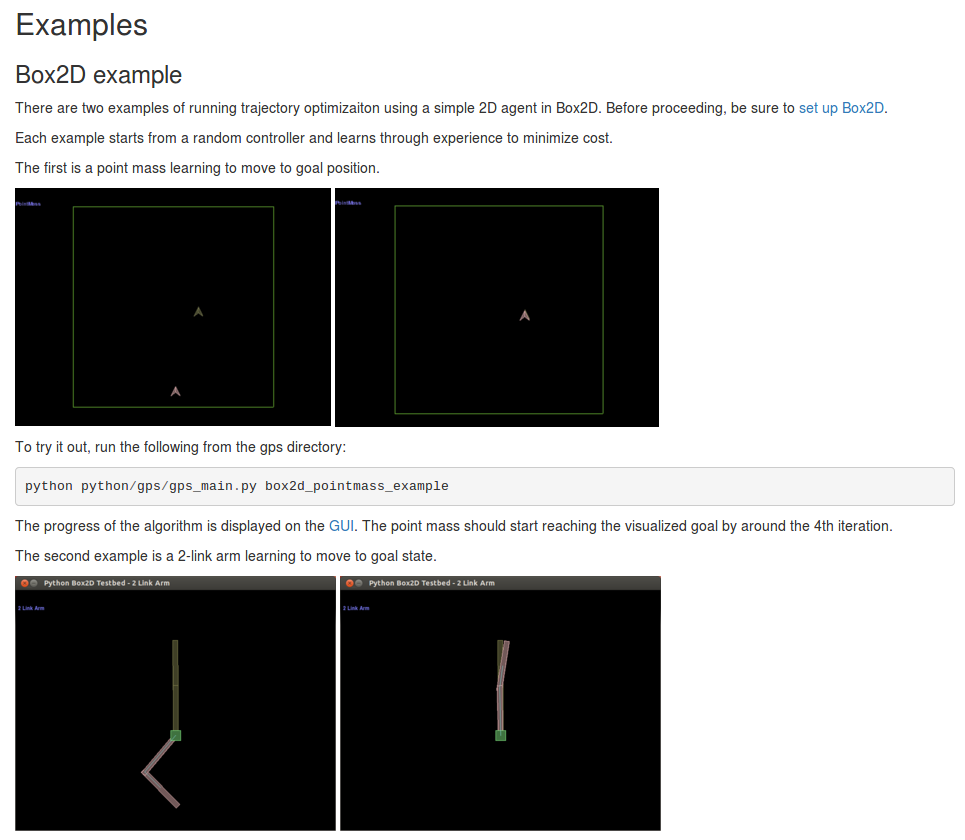

Guided Policy Search

This code is a reimplementation of the guided policy search algorithm and LQG-based trajectory optimization, meant to help others understand, reuse, and build upon existing work. It includes a complete robot controller and sensor interface for the PR2 robot via ROS, and an interface for simulated agents in Box2D and MuJoCo. Source code is available on GitHub.

While the core functionality is fully implemented and tested, the codebase is a work in progress. See the FAQ for information on planned future additions to the code.
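The LQG-based trajectory optimization mentioned above rests on a backward Riccati pass that turns linear dynamics and a quadratic cost into time-varying feedback gains. As an illustration only, the sketch below runs that pass for a scalar, deterministic system `x[t+1] = a*x[t] + b*u[t]` with cost `sum(q*x^2 + r*u^2)` plus a terminal cost `q_final*x^2`, then rolls out `u = -K*x`. It is not code from the GPS repository, which works with multivariate linear-Gaussian controllers; the function names are hypothetical.

```python
from typing import List, Tuple


def lqr_backward(a: float, b: float, q: float, r: float,
                 q_final: float, horizon: int) -> Tuple[List[float], float]:
    """Finite-horizon scalar LQR: returns gains K_t (u_t = -K_t x_t) and the cost-to-go P_0."""
    P = q_final  # cost-to-go coefficient at the final step
    gains = [0.0] * horizon
    for t in reversed(range(horizon)):
        K = (b * P * a) / (r + b * b * P)  # K = (R + B'PB)^-1 B'PA
        P = q + a * a * P - a * P * b * K  # P = Q + A'PA - A'PB K
        gains[t] = K
    return gains, P


def rollout(a: float, b: float, x0: float, gains: List[float]) -> List[float]:
    xs = [x0]
    for K in gains:
        u = -K * xs[-1]
        xs.append(a * xs[-1] + b * u)
    return xs


if __name__ == "__main__":
    gains, P0 = lqr_backward(a=1.0, b=1.0, q=1.0, r=1.0, q_final=1.0, horizon=50)
    print(round(P0, 6), round(gains[0], 6))  # 1.618034 0.618034
    print(rollout(1.0, 1.0, 1.0, gains)[:3])
```

For `a = b = q = r = 1` the cost-to-go converges to the golden ratio, so the early gains approach `1/phi`, and the closed-loop state shrinks by a factor of about 0.38 per step.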

-

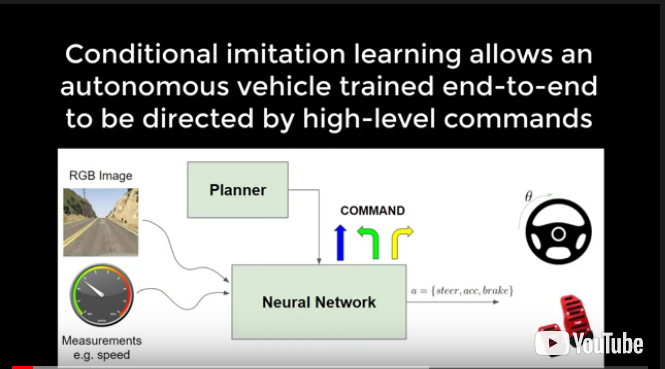

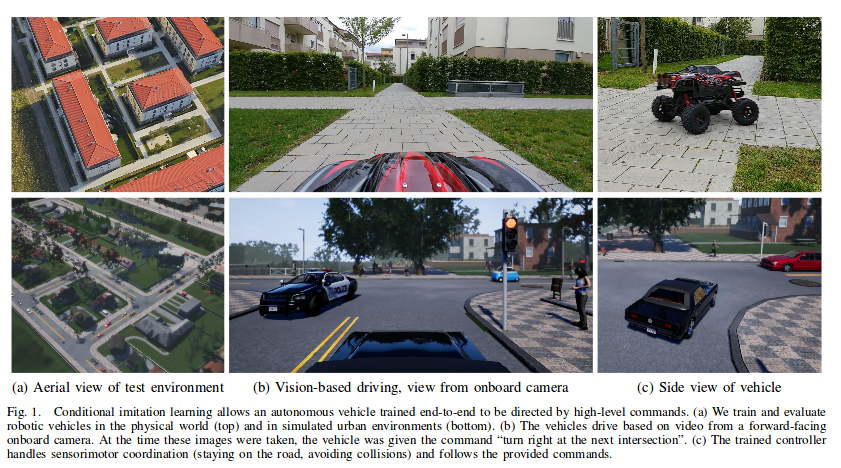

Imitation Learning Driving

End-to-end Driving via Conditional Imitation Learning

Conditional Imitation Learning at CARLA

CARLA simulator

Repository storing the conditional-imitation-learning-based AI that runs on CARLA. The trained model is the one used in the “CARLA: An Open Urban Driving Simulator” paper.

Conditional Imitation Learning at CARLA github

End-to-end Driving via Conditional Imitation Learning paper

-

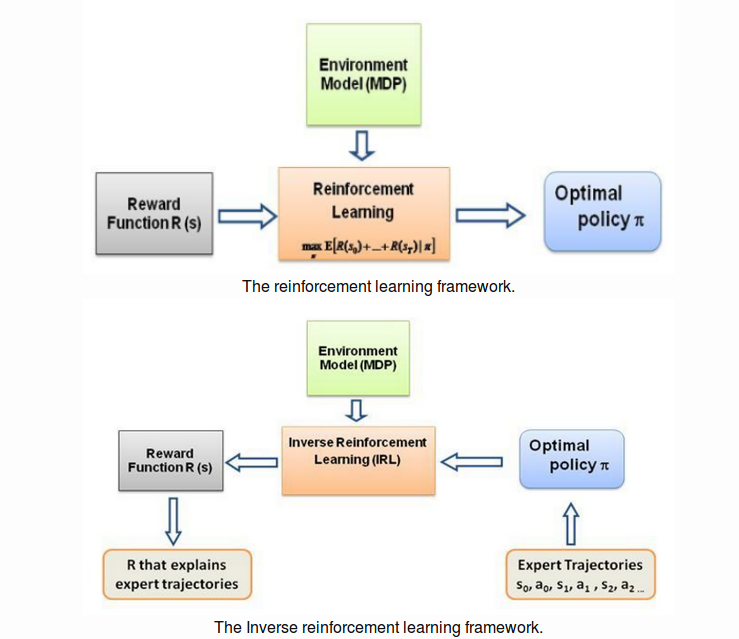

Inverse Reinforcement Learning

-

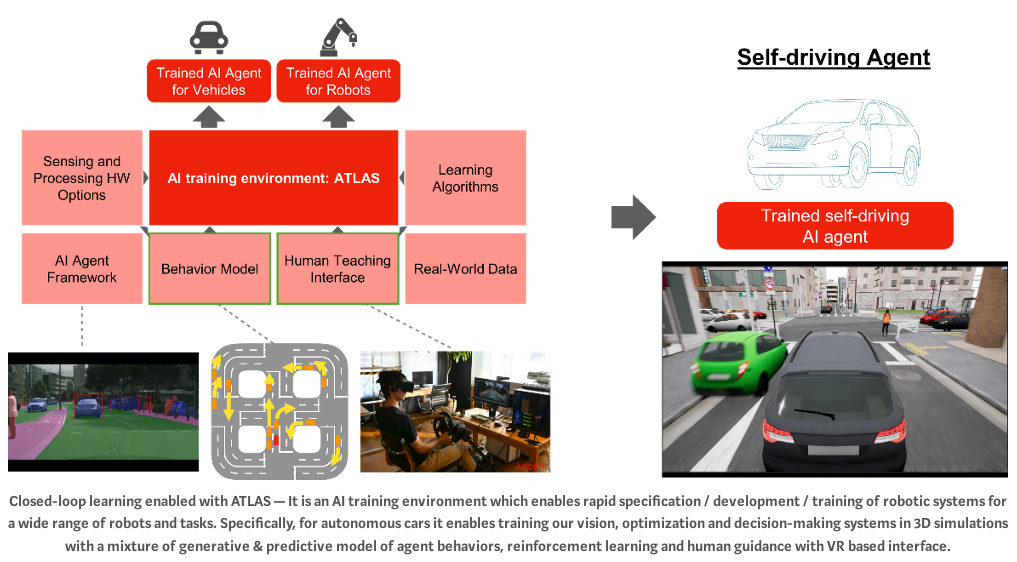

ATLAS AI training environment

-

Deep Reinforcement Learning: Imitation Learning

-

A Course in Machine Learning

-

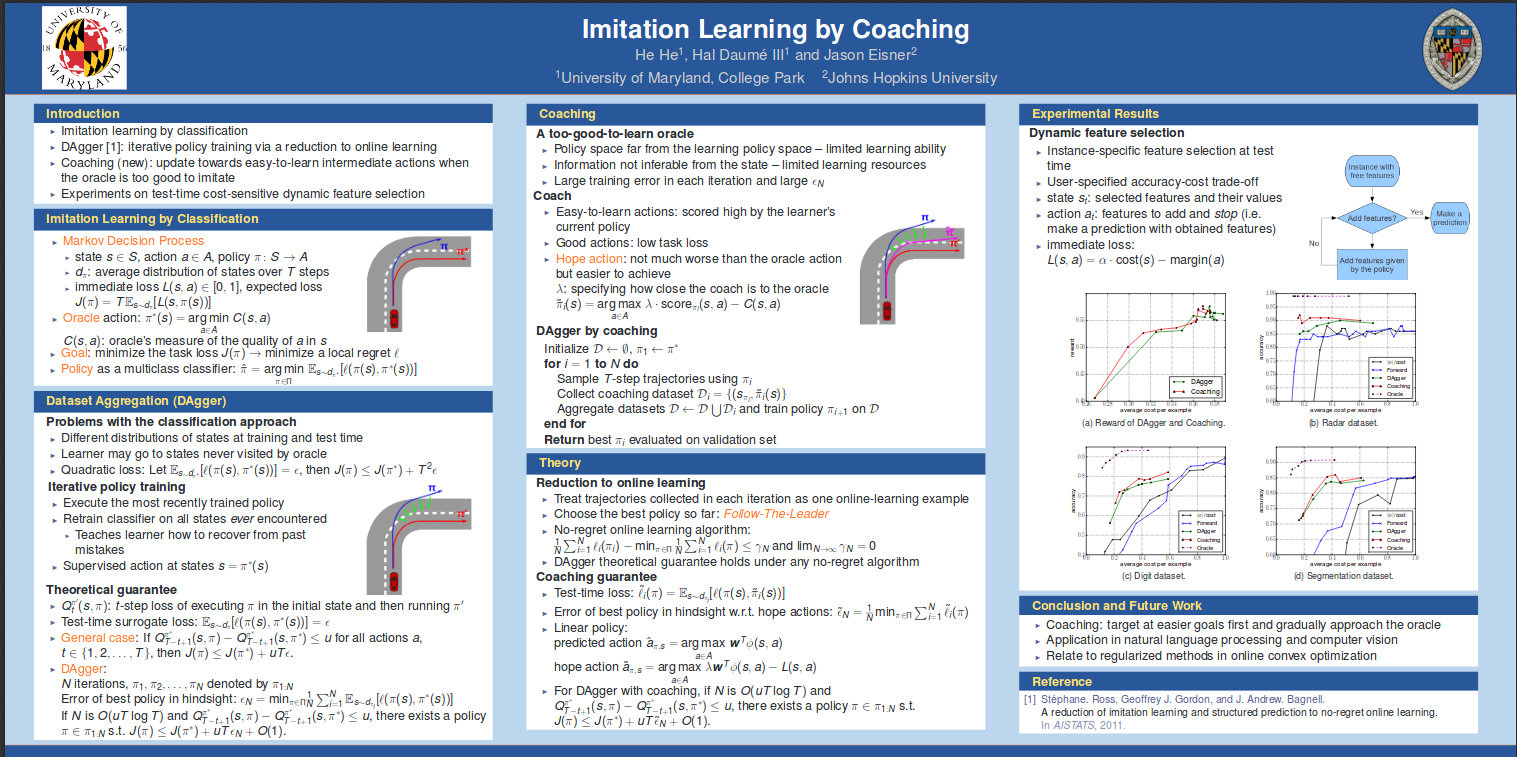

Imitation Learning by Coaching paper

Imitation Learning has been shown to be successful in solving many challenging real-world problems. Some recent approaches give strong performance guarantees by training the policy iteratively. However, it is important to note that these guarantees depend on how well the policy we found can imitate the oracle on the training data. When there is a substantial difference between the oracle’s ability and the learner’s policy space, we may fail to find a policy that has low error on the training set. In such cases, we propose to use a coach that demonstrates easy-to-learn actions for the learner and gradually approaches the oracle. By a reduction of learning by demonstration to online learning, we prove that coaching can yield a lower regret bound than using the oracle. We apply our algorithm to cost-sensitive dynamic feature selection, a hard decision problem that considers a user-specified accuracy-cost trade-off. Experimental results on UCI datasets show that our method outperforms state-of-the-art imitation learning methods in dynamic feature selection and two static feature selection methods.

The picture above is from this paper.

-

Just Another DAgger Implementation

-

Imitation Learning with Dataset Aggregation (DAgger) on the TORCS environment
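DAgger trains the policy iteratively: the learner drives, the expert labels the states the learner actually visited, those labels are aggregated with all earlier data, and the policy is retrained on the full set. A minimal sketch of that loop, assuming a hypothetical 1-D lane-keeping task (state `x` is the offset from the lane center), a linear expert `expert(x) = -0.8 * x`, noisy dynamics, and a linear learner fit by least squares. None of this comes from the linked repositories, which use TORCS and neural-network policies.

```python
import random
from typing import Callable, List, Tuple

Data = List[Tuple[float, float]]  # (state, expert action)


def expert(x: float) -> float:
    return -0.8 * x  # hypothetical oracle: steer back toward the lane center


def fit_linear_policy(data: Data) -> Tuple[Callable[[float], float], float]:
    # Least squares through the origin: action = k * state
    num = sum(x * a for x, a in data)
    den = sum(x * x for x, _ in data)
    k = num / den if den else 0.0
    return (lambda x: k * x), k


def rollout(policy: Callable[[float], float], x0: float, steps: int,
            rng: random.Random) -> List[float]:
    xs, x = [], x0
    for _ in range(steps):
        xs.append(x)
        x = x + policy(x) + rng.gauss(0.0, 0.1)  # hypothetical noisy 1-D dynamics
    return xs


def dagger(iterations: int = 5, steps: int = 20, seed: int = 0):
    rng = random.Random(seed)
    # Iteration 0: behavioral cloning on expert demonstrations
    data: Data = [(x, expert(x)) for x in rollout(expert, 1.0, steps, rng)]
    policy, k = fit_linear_policy(data)
    ks = [k]
    for _ in range(iterations):
        visited = rollout(policy, 1.0, steps, rng)   # the learner drives
        data += [(x, expert(x)) for x in visited]    # the expert labels the learner's states
        policy, k = fit_linear_policy(data)          # retrain on the aggregated dataset
        ks.append(k)
    return policy, ks, data


if __name__ == "__main__":
    policy, ks, data = dagger()
    print(len(data), [round(k, 3) for k in ks])
```

In this toy the expert is exactly linear, so the learner recovers it immediately; the point is the structure of the loop, which matters when the learner's mistakes lead it into states the expert never demonstrated.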

-

Research tools for autonomous systems in Python

-

Structure from Motion and other vision topics

-

Open source Structure from Motion pipeline

-

Structure-from-motion-python

-

COLMAP - Structure-from-Motion and Multi-View Stereo

-

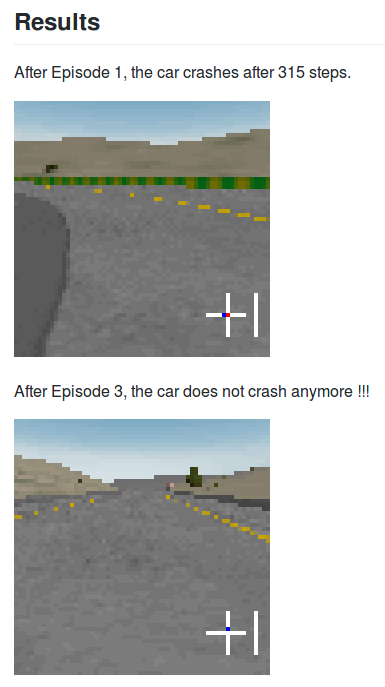

Using reinforcement learning to teach a car to avoid obstacles.

Using reinforcement learning to train an autonomous vehicle to avoid obstacles

NOTE: If you’re coming here from parts 1 or 2 of the Medium posts, visit the releases section and check out version 1.0.0, because the code has since evolved past that point.

This is a hobby project I created to learn the basics of reinforcement learning. It uses Python 3, Pygame, Pymunk, Keras, and Theano. It employs Q-learning, a model-free reinforcement learning algorithm, to learn how to move an object around a screen (drive itself) without running into obstacles.

The purpose of this project is to eventually use the learnings from the game to operate a real-life remote-control car, using distance sensors. I am carrying on that project in another GitHub repo here: https://github.com/harvitronix/rl-rc-car
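The heart of Q-learning is the update `Q(s, a) += alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))`. The project approximates Q with a Keras network over sensor readings; the sketch below uses a plain table instead, on a hypothetical 5-cell corridor where cell 0 is an obstacle (reward -1) and cell 4 is a safe goal (reward +1). The environment, the epsilon-greedy exploration, and all parameter values are assumptions for illustration.

```python
import random
from typing import List, Tuple

N_STATES, START, OBSTACLE, GOAL = 5, 2, 0, 4
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state: int, action_idx: int) -> Tuple[int, float, bool]:
    nxt = state + ACTIONS[action_idx]
    if nxt == OBSTACLE:
        return nxt, -1.0, True  # crashed
    if nxt == GOAL:
        return nxt, 1.0, True   # reached safety
    return nxt, 0.0, False


def q_learning(episodes: int = 300, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done = START, False
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(2)                  # explore
            else:
                a = 0 if Q[s][0] >= Q[s][1] else 1    # exploit (ties go left)
            s2, r, done = step(s, a)
            target = r if done else r + gamma * max(Q[s2])  # no bootstrap from terminal states
            Q[s][a] += alpha * (target - Q[s][a])
            s = s2
    return Q


if __name__ == "__main__":
    Q = q_learning()
    greedy = ["left" if Q[s][0] >= Q[s][1] else "right" for s in (1, 2, 3)]
    print(greedy)  # ['right', 'right', 'right']
```

Replacing the table with a neural network (as the project does) lets the same update generalize across continuous sensor readings, at the cost of the tabular method's convergence guarantees.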

-

Driving a car in simulation with deep learning

-

End-to-end simulation for self-driving cars https://deepdrive.io

-

Deep learning project to automate vehicle driving in a simulator

-

Deep Reinforcement Learning: Playing a Racing Game

-

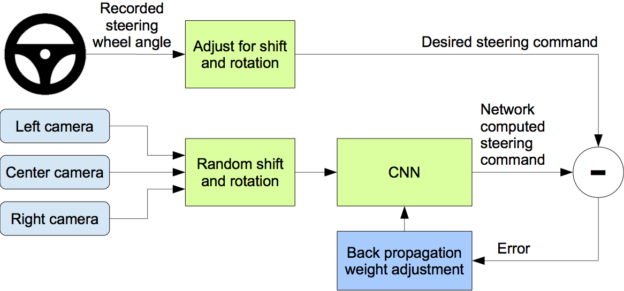

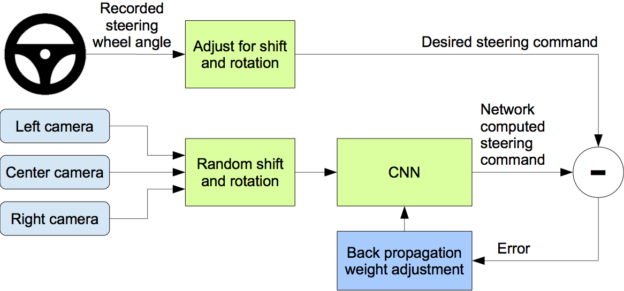

A TensorFlow implementation, with some changes, of this Nvidia paper: https://arxiv.org/pdf/1604.07316.pdf
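The network in the Nvidia paper is a small CNN; as we recall it, it takes a 66×200 input and applies three 5×5 stride-2 convolutions (24, 36, 48 filters), two 3×3 stride-1 convolutions (64 filters each), then fully connected layers of 100, 50, and 10 units and a single steering output. Treat those settings as an assumption to check against the paper. The sketch below only computes the feature-map shapes they imply, assuming "valid" (no) padding, which is a quick way to verify a reimplementation's layer configuration.

```python
from typing import List, Tuple

# (filters, kernel size, stride) per conv layer; assumed from the paper, see lead-in
CONV_LAYERS = [(24, 5, 2), (36, 5, 2), (48, 5, 2), (64, 3, 1), (64, 3, 1)]
FC_LAYERS = [100, 50, 10, 1]  # fully connected widths, last one is the steering output


def conv_out(size: int, kernel: int, stride: int) -> int:
    # Output length of a 'valid' (unpadded) convolution along one axis
    return (size - kernel) // stride + 1


def pilotnet_shapes(height: int = 66, width: int = 200) -> Tuple[List[Tuple[int, int, int]], int]:
    shapes = []
    h, w = height, width
    for filters, k, s in CONV_LAYERS:
        h, w = conv_out(h, k, s), conv_out(w, k, s)
        shapes.append((h, w, filters))
    flat = h * w * CONV_LAYERS[-1][0]  # size of the flattened conv output
    return shapes, flat


if __name__ == "__main__":
    shapes, flat = pilotnet_shapes()
    for shape in shapes:
        print(shape)
    print("flattened:", flat, "-> FC", FC_LAYERS)
```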

-

End-to-End Deep Learning for Self-Driving Cars

-

CVPR 2017 Tutorial: Geometric and Semantic 3D Reconstruction

Schedule:

Srikumar: Feature-based and deep learning techniques for single-view problems: depth estimation; semantic segmentation; semantic boundary labeling

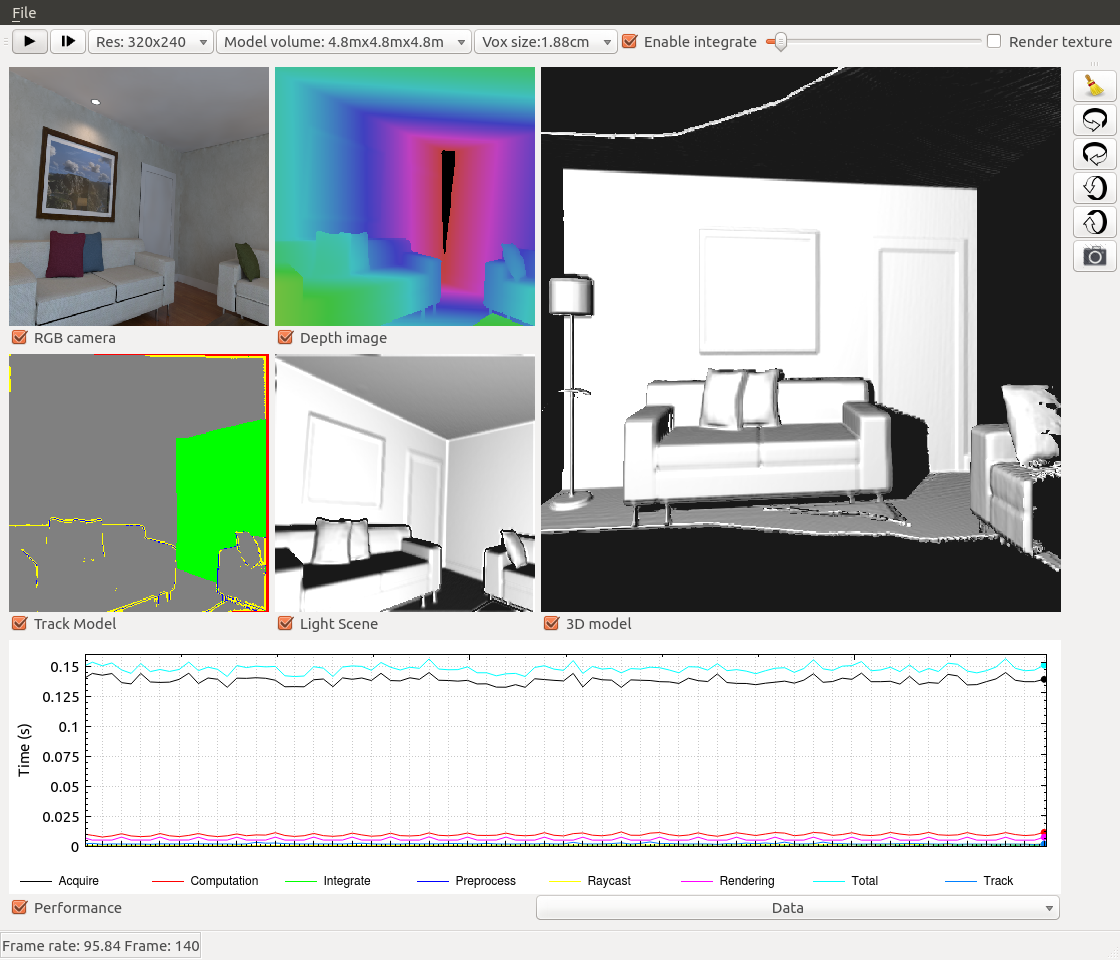

Jakob: Geometric visual SLAM: feature-based and direct methods; sparse, dense, and semi-dense methods; stereo and RGB-D vSLAM; semantic SLAM

Sudipta: Semi-global stereo matching (SGM) and variants; discrete and continuous optimization in stereo; deep learning in stereo; efficient scene flow estimation from stereoscopic video

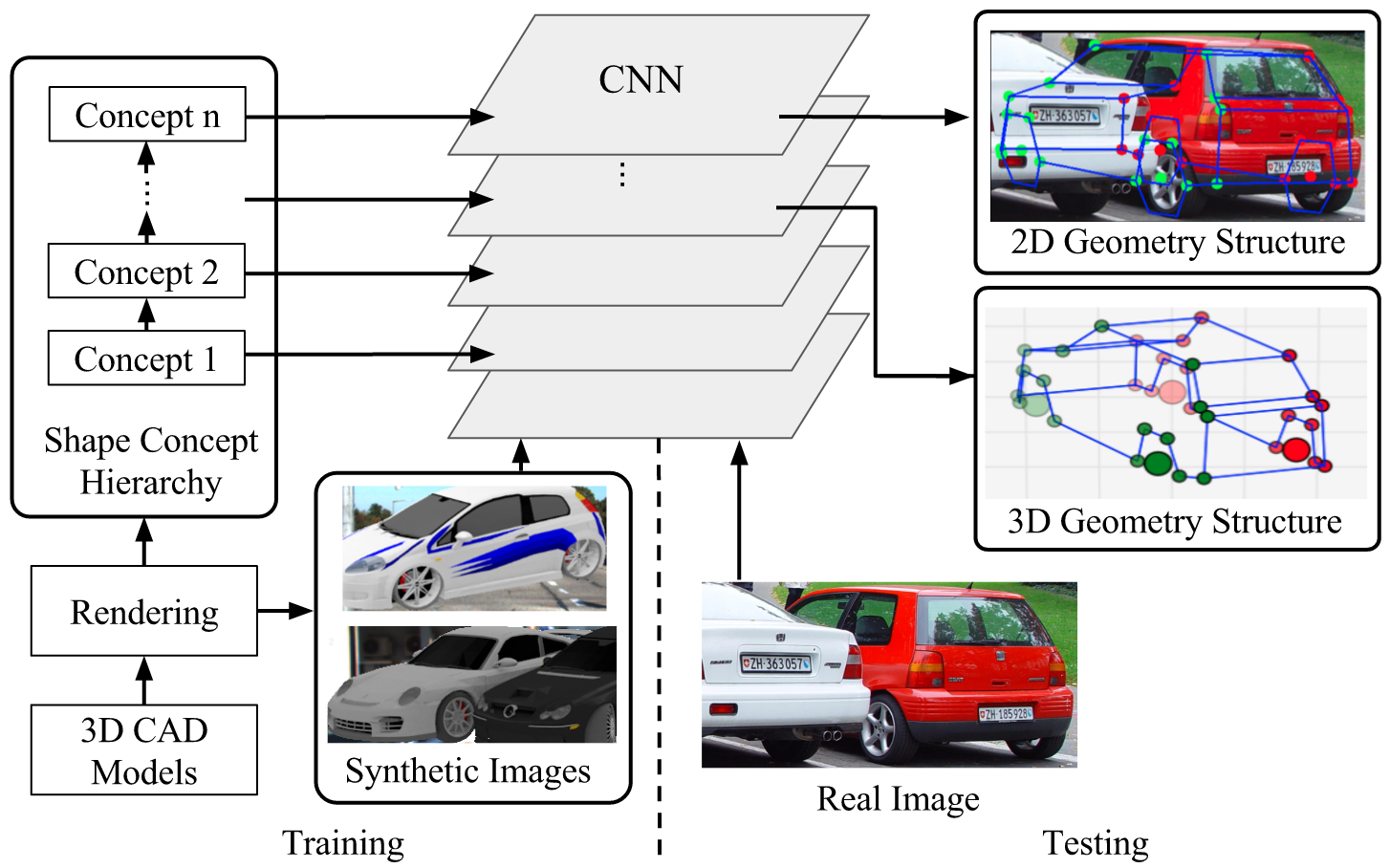

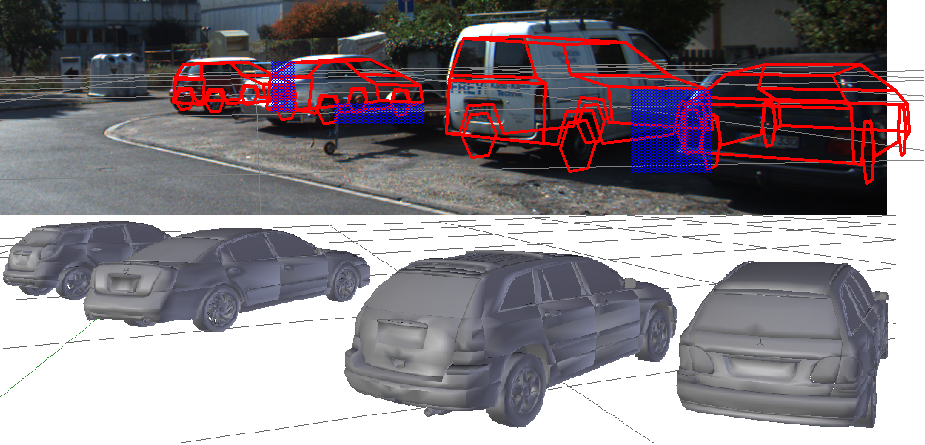

Christian: Volumetric reconstruction, depth map fusion; semantic 3D reconstruction; 3D object shape priors

Christian: 3D Prediction using ConvNets

Various Visual-SLAM demos (if time permitted)

-

3D Machine Learning

-

Vision Project

Self-driving car in GTAV with Deep Learning