Direct Sparse Odometry SLAM

DSO

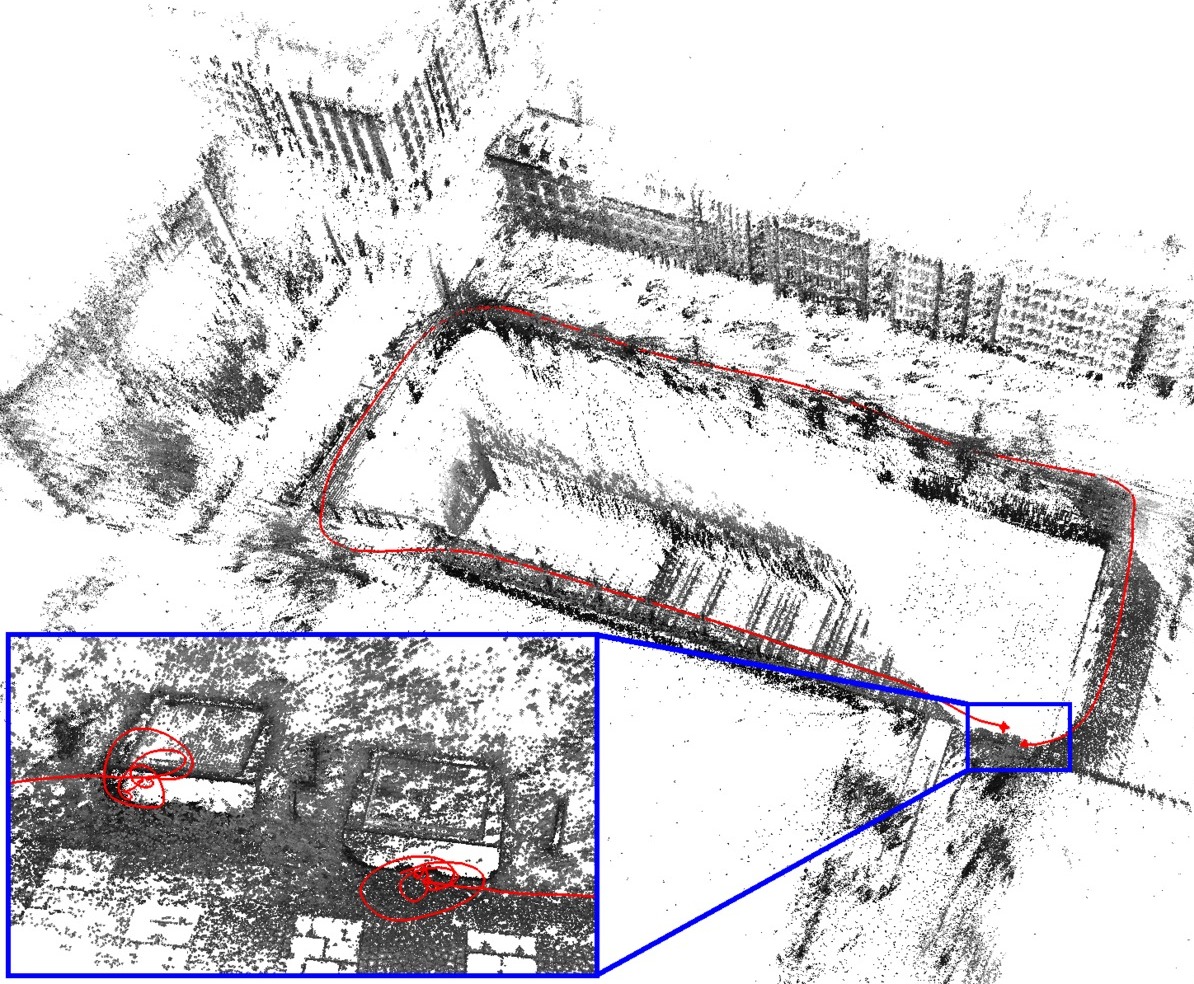

is a novel direct and sparse formulation for Visual Odometry. It combines a fully direct probabilistic model (minimizing a photometric error) with consistent, joint optimization of all model parameters, including geometry - represented as inverse depth in a reference frame - and camera motion. This is achieved in real time by omitting the smoothness prior used in other direct methods and instead sampling pixels evenly throughout the images. DSO does not depend on keypoint detectors or descriptors, thus it can naturally sample pixels from across all image regions that have intensity gradient, including edges or smooth intensity variations on mostly white walls. The proposed model integrates a full photometric calibration, accounting for exposure time, lens vignetting, and non-linear response functions. We thoroughly evaluate our method on three different datasets comprising several hours of video. The experiments show that the presented approach significantly outperforms state-of-the-art direct and indirect methods in a variety of real-world settings, both in terms of tracking accuracy and robustness.

Open-Source Code

The full source code is available on Github under GPLv3: https://github.com/JakobEngel/dso This main project is meant to run on datasets in the TUM monoVO dataset format (i.e., not with a live camera).

We also provide a minimalistic example (200 lines of c++ code) how to integrate DSO to work with a live camera, using ROS for video capture: https://github.com/JakobEngel/dso_ros. Feel free to your use-case / camera capture environment / ROS version.

Note that as for LSD-SLAM, we use a dual-licensing model; Please contact Jakob Engel or Prof. Daniel Cremers for details on commercial licensing.

Related Papers

- Direct Sparse Odometry, J. Engel, V. Koltun, D. Cremers, In arXiv:1607.02565, 2016

- A Photometrically Calibrated Benchmark For Monocular Visual Odometry, J. Engel, V. Usenko, D. Cremers, In arXiv:1607.02555, 2016